40 tf dataset get labels

Using the tf.data.Dataset | Tensor Examples

    # Create the tf.data.Dataset from the existing data.
    dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))
    # By default you "run out of data"; this is why you repeat the dataset and serve data in batches.
    dataset = dataset.repeat().batch(batch_size)
    # Train for one epoch to verify this works.
    model = get_and_compile_model() …

A hands-on guide to TFRecords - Towards Data Science To get these {image, label} pairs into the TFRecord file, we write a short method that takes an image and its label. Using our helper functions defined above, we create a dictionary that stores the shape of our image under the keys height, width, and depth; we need this information to reconstruct our image later on.

GitHub - google-research/tf-slim Furthermore, TF-Slim's slim.stack operator allows a caller to repeatedly apply the same operation with different arguments to create a stack or tower of layers. slim.stack also creates a new tf.variable_scope for each operation created. For example, a simple way to create a Multi-Layer Perceptron (MLP):

Tf dataset get labels

Predict cluster labels spots using Tensorflow - Read the Docs We create a vector of our labels with which to train the classifier. In this case, we will train a classifier to predict cluster labels obtained from gene expression. We'll create a one-hot encoded array with the convenient function tf.one_hot. Furthermore, we'll split the vector indices to get a train and test set.

GitHub - jahongir7174/YOLOv5-tf: YOLOv5 implementation using ... Apr 27, 2021 · YOLOv5 implementation using TensorFlow 2. Train: change data_dir, image_dir, label_dir and class_dict in config.py; choose the version in config.py; optionally, run python main.py --anchor to generate anchors for your dataset and change anchors in config.py.

How to use Dataset in TensorFlow - Medium We can also pass more than one NumPy array; one classic example is when we have data divided into features and labels:

    dataset = tf.data.Dataset.from_tensor_slices(x)
    features, labels = (np.random.sample((100, 2)), np.random.sample((100, 1)))
    dataset = tf.data.Dataset.from_tensor_slices((features, labels))
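As a minimal sketch of how the labels can be read back out of a dataset built from a (features, labels) tuple, the example below maps each element to its second component. The random data and the variable names `label_ds` and `recovered` are illustrative assumptions, not part of the quoted article.

```python
import numpy as np
import tensorflow as tf

# Synthetic data shaped like the snippet: 100 samples, 2 features, 1 label each.
rng = np.random.default_rng(0)
features = rng.random((100, 2))
labels = rng.random((100, 1))

dataset = tf.data.Dataset.from_tensor_slices((features, labels))

# Each element is a (feature, label) pair; keep only the label component.
label_ds = dataset.map(lambda x, y: y)

# Materialize the labels as one NumPy array, in dataset order.
recovered = np.stack(list(label_ds.as_numpy_iterator()))
print(recovered.shape)
```

This only returns the labels in the original order because the dataset is not shuffled; with shuffling, labels should be collected in the same pass as whatever they are compared against.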

tfds.visualization.show_examples | TensorFlow Datasets This function is for interactive use (Colab, Jupyter). It displays and returns a plot of (rows*columns) images from a tf.data.Dataset. Usage:

    ds, ds_info = tfds.load('cifar10', split='train', with_info=True)
    fig = tfds.show_examples(ds, ds_info)

TensorFlow Datasets By using as_supervised=True, you can get a tuple (features, label) instead for supervised datasets.

    ds = tfds.load('mnist', split='train', as_supervised=True)
    ds = ds.take(1)
    for image, label in ds:  # example is (image, label)
        print(image.shape, label)

tensorflow tutorial begins - dataset: get to know tf.data quickly

    def train_input_fn(features, labels, batch_size):
        """An input function for training"""
        # Convert the inputs to a dataset.
        dataset = tf.data.Dataset.from_tensor_slices((dict(features), labels))
        # Shuffle, repeat, and batch the samples.
        dataset = dataset.shuffle(1000).repeat().batch(batch_size)
        # Return the dataset.
        return dataset

Custom training with tf.distribute.Strategy | TensorFlow Core Download the Fashion MNIST dataset:

    fashion_mnist = tf.keras.datasets.fashion_mnist
    (train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()
    # Add a dimension to the array -> new shape == (28, 28, 1)
    # This is done because the first layer in our model is a convolutional ...

How to filter Tensorflow dataset by class/label? - Kaggle Hey @bopengiowa, to filter the dataset based on class labels we need to return the labels along with the image (as tuples) in the parse_tfrecord() function. Once that is done, we could filter the required classes using the filter method of tf.data.Dataset. Finally we could drop the labels to obtain just the images, like so:

TensorFlow 2.0: tf.data API - Medium But now there is a very successful module that organizes the whole ETL pipeline: tf.data. I want to give a brief introduction to the tf.data API and its usage. tf.data works with different types of input data: CSV ...

TF Datasets & tf.Data for Efficient Data Pipelines - Medium Importing a dataset using tf.data is extremely simple! From a NumPy array: get your data into two arrays (I've called them features and labels) and use the tf.data.Dataset.from_tensor_slices method to convert them into slices. You can also make individual tf.data.Dataset objects for both, and input them separately in the model.fit function.

Multi-label Text Classification with Tensorflow - Vict0rsch The labels won't require padding, as they are already a consistent 2D array in the text file, which will be converted to a 2D Tensor. But Tensorflow does not know it won't need to pad the labels, so we still need to specify the padded_shape argument: if need be, the Dataset should pad each sample with a 1D Tensor (hence tf.TensorShape ( [None ...
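The padding point in the last snippet can be sketched as follows. This is an illustration under assumed toy data, not the article's own code: the sequences, the three-column label rows, and the generator are hypothetical. It shows that `padded_batch` takes one shape entry per component, so the labels need an entry even though they are already fixed-width.

```python
import tensorflow as tf

# Hypothetical toy data: variable-length token sequences, fixed-width label rows.
sequences = [[1, 2, 3], [4, 5], [6]]
labels = [[1, 0, 0], [0, 1, 1], [1, 1, 0]]

def gen():
    for seq, lab in zip(sequences, labels):
        yield seq, lab

ds = tf.data.Dataset.from_generator(
    gen,
    output_signature=(
        tf.TensorSpec(shape=(None,), dtype=tf.int32),
        tf.TensorSpec(shape=(3,), dtype=tf.int32),
    ),
)

# padded_shapes needs one entry per component, including the labels:
# [None] pads sequences to the longest in the batch, [3] leaves labels unchanged.
batched = ds.padded_batch(3, padded_shapes=([None], [3]))
tokens, label_batch = next(iter(batched))
print(tokens.numpy())
print(label_batch.numpy())
```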

Data preprocessing using tf.keras.utils.image_dataset_from ... - Value ML If labels are "inferred", the directory should contain subdirectories, each holding the photos for one class. The directory structure is otherwise disregarded. labels: Either "inferred" (labels are created from the directory structure), None (no labels), or a list/tuple of integer labels of the same size as the number of image files discovered in the directory ...

Images with directories as labels for Tensorflow data 1.jpg, 2.jpg, …, n.jpg. If we want to use the Tensorflow Dataset API, one option is to use tf.contrib.data.Dataset.list_files with a glob pattern. This gives us a dataset of strings for our file paths, and we can then use tf.read_file and tf.image.decode_jpeg to map in the actual image.

How to solve Multi-Label Classification Problems in Deep ... - Medium Notice that above, the True (Actual) Labels are encoded with multi-hot vectors. Prepare the data pipeline by setting batch size & buffer size using ...

tfds.features.ClassLabel | TensorFlow Datasets get_tensor_info() -> tfds.features.TensorInfo. See base class for details. get_tensor_spec() -> TreeDict[tf.TensorSpec]. Returns the tf.TensorSpec of this feature (not the element spec!). Note that the output of this method may not correspond to the element spec of the dataset.
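To make the "inferred" label mode from the first snippet concrete, here is a small standard-library sketch that derives integer labels from a root/<class>/<file> layout. The helper `infer_labels` and the `cats`/`dogs` folders are hypothetical; the sketch assumes class names are ordered alphanumerically, which is how the Keras documentation describes the default ordering, and it does not reproduce the library's actual implementation.

```python
import tempfile
from pathlib import Path

def infer_labels(root):
    """Return (paths, labels, class_names) from a root/<class>/<file> layout."""
    root = Path(root)
    # Class names are the subdirectory names in sorted order.
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    index = {name: i for i, name in enumerate(class_names)}
    paths, labels = [], []
    for name in class_names:
        for f in sorted((root / name).iterdir()):
            if f.is_file():
                paths.append(f)
                labels.append(index[name])
    return paths, labels, class_names

# Build a throwaway directory tree with two classes to exercise the helper.
with tempfile.TemporaryDirectory() as tmp:
    for cls, n in [("dogs", 2), ("cats", 1)]:
        d = Path(tmp) / cls
        d.mkdir()
        for i in range(n):
            (d / f"{i}.jpg").touch()
    paths, labels, class_names = infer_labels(tmp)

print(class_names, labels)
```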

tf.data: Build Efficient TensorFlow Input Pipelines for Image Datasets 3. Build Image File List Dataset. Now we can gather the image file names and paths by traversing the images/ folders. There are two options to load file list from image directory using tf.data ...

Keras tensorflow : Get predictions and their associated ground ... - GitHub I am new to Tensorflow and Keras so the answer is perhaps simple, but I have a batched and prefetched tensorflow dataset (of type tf.data.TFRecordDataset) which consists of images and their labels (int type), and I apply a classification model to it.
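One way to keep predictions and ground-truth labels aligned is to collect both in a single pass over the dataset, so that any shuffling cannot separate them. The sketch below assumes an in-memory stand-in for the batched, prefetched dataset, and `fake_model` is a hypothetical placeholder for the real classifier.

```python
import numpy as np
import tensorflow as tf

# Stand-in for a batched, prefetched dataset of (image, integer label) pairs.
images = tf.random.uniform((10, 4, 4, 1), seed=0)
labels = tf.constant([0, 1, 2, 0, 1, 2, 0, 1, 2, 0], dtype=tf.int64)
ds = tf.data.Dataset.from_tensor_slices((images, labels)).batch(4).prefetch(1)

def fake_model(batch):
    """Hypothetical classifier: returns random scores for 3 classes."""
    return tf.random.uniform((batch.shape[0], 3))

y_true, y_pred = [], []
for x, y in ds:
    # Labels and predictions come from the same batch, so they stay paired.
    y_true.append(y.numpy())
    y_pred.append(tf.argmax(fake_model(x), axis=1).numpy())

y_true = np.concatenate(y_true)
y_pred = np.concatenate(y_pred)
print(y_true.shape, y_pred.shape)
```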

tf.data: Build TensorFlow input pipelines | TensorFlow Core Jun 09, 2022 · The tf.data API enables you to build complex input pipelines from simple, reusable pieces. For example, the pipeline for an image model might aggregate data from files in a distributed file system, apply random perturbations to each image, and merge randomly selected images into a batch for training.

Multi-Label Image Classification in TensorFlow 2.0 - Medium model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=LR), loss=macro_soft_f1, metrics=[macro_f1]) Now you can pass the training dataset of (features, labels) to fit the model and indicate a separate dataset for validation. The performance on the validation set will be measured after each epoch.

Get labels from dataset when using tensorflow image_dataset ... - Javaer101 My images are organized in directories having the label as the name. The documentation says the function returns a tf.data.Dataset object. If label_mode is None, it yields float32 tensors of shape (batch_size, image_size[0], image_size[1], num_channels), encoding images (see below for rules regarding num_channels).

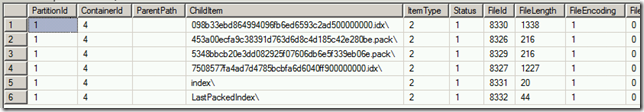

Image Dataset with TFRecord Files! | by Jelaleddin Sultanov | AI³ | Theory, Practice, Business ...

Tensorflow | tf.data.Dataset.from_tensor_slices() - GeeksforGeeks With the help of the tf.data.Dataset.from_tensor_slices() method, we can get the slices of an array in the form of objects. Syntax: tf.data.Dataset.from_tensor_slices(list) Return: the objects of sliced elements. Example #1: In this example we can see that by using the tf.data.Dataset.from_tensor_slices() method, we are able to get the ...

Datasets - TF Semantic Segmentation Documentation

    dataset/
        labels.txt
        test/
            images/
            masks/
        train/
            images/
            masks/
        val/
            images/
            masks/

or use

    dataset/
        labels.txt
        images/
        masks/

The labels.txt should contain a list of labels separated by newlines (\n). For instance, it looks like this:

    background
    car
    pedestrian
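A labels.txt file of this kind can be read with a few lines of standard-library Python. The sketch below is an assumption-laden illustration: the helper `read_labels` is hypothetical, and mapping each label to its line position is a common convention rather than something the quoted documentation confirms.

```python
import tempfile
from pathlib import Path

def read_labels(path):
    """Read one label per line, skipping blank lines."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return [line.strip() for line in lines if line.strip()]

# Write a throwaway labels.txt matching the example above, then parse it.
with tempfile.TemporaryDirectory() as tmp:
    labels_file = Path(tmp) / "labels.txt"
    labels_file.write_text("background\ncar\npedestrian\n", encoding="utf-8")
    names = read_labels(labels_file)

# Assumed convention: the class index is the label's line position.
label_to_index = {name: i for i, name in enumerate(names)}
print(label_to_index)
```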

TFRecord and tf.train.Example | TensorFlow Core Protocol buffers are a cross-platform, cross-language library for efficient serialization of structured data. Protocol messages are defined by .proto files, these are often the easiest way to understand a message type. The tf.train.Example message (or protobuf) is a flexible message type that represents a {"string": value} mapping.
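As a rough sketch of the {"string": value} mapping, the example below stores an image payload and its integer label in a tf.train.Example, serializes it, and parses the label back. The feature keys `image_raw` and `label`, the helper `make_example`, and the three-byte payload are illustrative assumptions.

```python
import tensorflow as tf

def make_example(image_bytes, label):
    """Pack raw image bytes and an integer label into a tf.train.Example."""
    feature = {
        "image_raw": tf.train.Feature(
            bytes_list=tf.train.BytesList(value=[image_bytes])),
        "label": tf.train.Feature(
            int64_list=tf.train.Int64List(value=[label])),
    }
    return tf.train.Example(features=tf.train.Features(feature=feature))

# Serialize to the byte string that would be written to a TFRecord file.
serialized = make_example(b"\x00\x01\x02", 7).SerializeToString()

# Parsing requires the same keys and types that were used when writing.
parsed = tf.io.parse_single_example(
    serialized,
    {
        "image_raw": tf.io.FixedLenFeature([], tf.string),
        "label": tf.io.FixedLenFeature([], tf.int64),
    },
)
print(int(parsed["label"].numpy()))
```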

How to get the labels from tensorflow dataset - Stack Overflow

    ds_test = tf.data.experimental.make_csv_dataset(
        file_pattern="./dfj_test/part-*.csv.gz",
        batch_size=batch_size,
        num_epochs=1,
        # column_names=use_cols,
        label_name='label_id',
        # select_columns=select_cols,
        num_parallel_reads=30,
        compression_type='GZIP',
        shuffle_buffer_size=12800)
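When `label_name` is set, `make_csv_dataset` yields (features, label) batches, so the labels can be gathered by iterating once. The sketch below is one possible approach under simplifying assumptions: it writes a small uncompressed CSV with a made-up `feature_a` column and disables shuffling so the order is reproducible.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical CSV with one feature column and the label column.
    path = os.path.join(tmp, "part-0.csv")
    with open(path, "w") as f:
        f.write("feature_a,label_id\n0.1,3\n0.2,1\n0.3,2\n0.4,1\n0.5,0\n")

    ds = tf.data.experimental.make_csv_dataset(
        file_pattern=path,
        batch_size=2,
        num_epochs=1,
        label_name="label_id",
        shuffle=False,  # keep file order so the result is deterministic
    )

    # Each element is (dict of feature batches, label batch); keep the labels.
    labels = np.concatenate([y.numpy() for _, y in ds])

print(labels)
```

With shuffling left on, as in the question, the labels should be collected in the same loop that produces the predictions they will be compared against.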

How to convert my tf.data.dataset into image and label arrays #2499 A tf.data dataset. Should return a tuple of either (inputs, targets) or (inputs, targets, sample_weights). A generator or keras.utils.Sequence returning (inputs, targets) or (inputs, targets, sample_weights). A more detailed description of unpacking behavior for iterator types (Dataset, generator, Sequence) is given below.
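A possible way to turn a batched (inputs, targets) dataset back into image and label arrays is to undo the batching and iterate in NumPy form. The tiny arrays below are stand-ins for real images, and the names `image_array` and `label_array` are illustrative.

```python
import numpy as np
import tensorflow as tf

# Stand-in data: six 2x2 "images" with integer labels, served in batches of 4.
images = np.arange(6 * 2 * 2, dtype=np.float32).reshape(6, 2, 2)
labels = np.array([0, 1, 0, 1, 1, 0], dtype=np.int32)
ds = tf.data.Dataset.from_tensor_slices((images, labels)).batch(4)

# Undo batching, then pull every (image, label) pair into NumPy.
pairs = list(ds.unbatch().as_numpy_iterator())
image_array = np.stack([img for img, _ in pairs])
label_array = np.array([lab for _, lab in pairs])
print(image_array.shape, label_array.shape)
```

This loads the whole dataset into memory, so it is only sensible for datasets that fit there.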

python - Get labels from dataset when using tensorflow image ... Nov 04, 2020 · I am trying to add a confusion matrix, and I need to feed tensorflow.math.confusion_matrix() the test labels. My problem is that I cannot figure out how to access the labels from the dataset object created by tf.keras.preprocessing.image_dataset_from_directory() My images are organized in directories having the label as the name.
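For the confusion-matrix case, a minimal sketch is to concatenate the label component of every batch and pass the result to tf.math.confusion_matrix. The in-memory `test_ds` stands in for the dataset returned by image_dataset_from_directory, and `predictions` is a hypothetical model output chosen for illustration.

```python
import numpy as np
import tensorflow as tf

# Stand-in for a batched (images, labels) test dataset with shuffling off.
images = tf.zeros((6, 8, 8, 3))
labels = tf.constant([0, 0, 1, 1, 2, 2], dtype=tf.int32)
test_ds = tf.data.Dataset.from_tensor_slices((images, labels)).batch(4)

# Gather the label batches into one flat array.
test_labels = np.concatenate([y.numpy() for _, y in test_ds], axis=0)

# Hypothetical predicted class indices, one per test image.
predictions = np.array([0, 1, 1, 1, 2, 0])

# Rows are true classes, columns are predicted classes.
cm = tf.math.confusion_matrix(test_labels, predictions, num_classes=3).numpy()
print(cm)
```

Because image_dataset_from_directory shuffles by default, the test dataset would need shuffle=False (or labels and predictions collected in the same loop) for the two arrays to line up.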

How to filter the dataset to get images from a specific class ... - GitHub Is it possible to make predicate function more generic, so that I can keep N number of classes and filter out the rest of the classes? or is there any other way to filter the dataset to get images from a specific class? Environment information. Operating System: Distribution: Anaconda; Python version: <3.7.7> Tensorflow 2.1; tensorflow_datasets ...
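One way to make the predicate generic is to build it from a list of allowed class ids and test membership with tf.reduce_any. The sketch below uses made-up data, and `keep_classes` is a hypothetical helper name; it also shows dropping the labels afterwards to obtain only the images.

```python
import tensorflow as tf

# Stand-in dataset: eight one-element "images" labelled with classes 0..3.
images = tf.reshape(tf.range(8, dtype=tf.float32), (8, 1))
labels = tf.constant([0, 1, 2, 3, 0, 1, 2, 3], dtype=tf.int64)
ds = tf.data.Dataset.from_tensor_slices((images, labels))

def keep_classes(allowed):
    """Build a filter predicate that keeps elements whose label is in `allowed`."""
    allowed = tf.constant(allowed, dtype=tf.int64)

    def predicate(image, label):
        # True if the scalar label equals any of the allowed class ids.
        return tf.reduce_any(tf.equal(label, allowed))

    return predicate

filtered = ds.filter(keep_classes([1, 3]))
kept_labels = [int(y) for _, y in filtered]

# Drop the labels to keep only the images of the selected classes.
images_only = filtered.map(lambda image, label: image)
kept_images = [float(x[0]) for x in images_only]
print(kept_labels, kept_images)
```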

Loading Custom Image Dataset for Deep Learning Models: Part 1 Aug 19, 2020 · We can also convert the input data to tensors to train the model by using tf.cast(): history = model.fit(x=tf.cast(np.array(img_data), tf.float64), y=tf.cast(list(map(int, target_val)), tf.int32), epochs=5). We will use the same model for further training by loading the image dataset using different libraries. Loading image data using PIL

tf.data.Dataset | TensorFlow Core v2.9.1
